In The Future...

Steps To Becoming An AI

We will collaborate with companies like Soul Machines to build custom products: AIs with CGI faces and voices. Customers will be able to personalize their avatars in many ways, from facial expressions to tone of voice to posture. These virtual people could serve a wide range of uses, from providing make-up tips to giving first aid advice in hazardous situations.

Step One: Using Your Data

We will use your social media data to extend your reach. “Your phone is already an extension of you” (Elon Musk).

Step Two: Recording Your Voice

We will create a voice model that copies the way you sound. Combined with the data collected in Step One, it will mimic both the way you speak (your vocabulary and phrasing) and the way you sound.

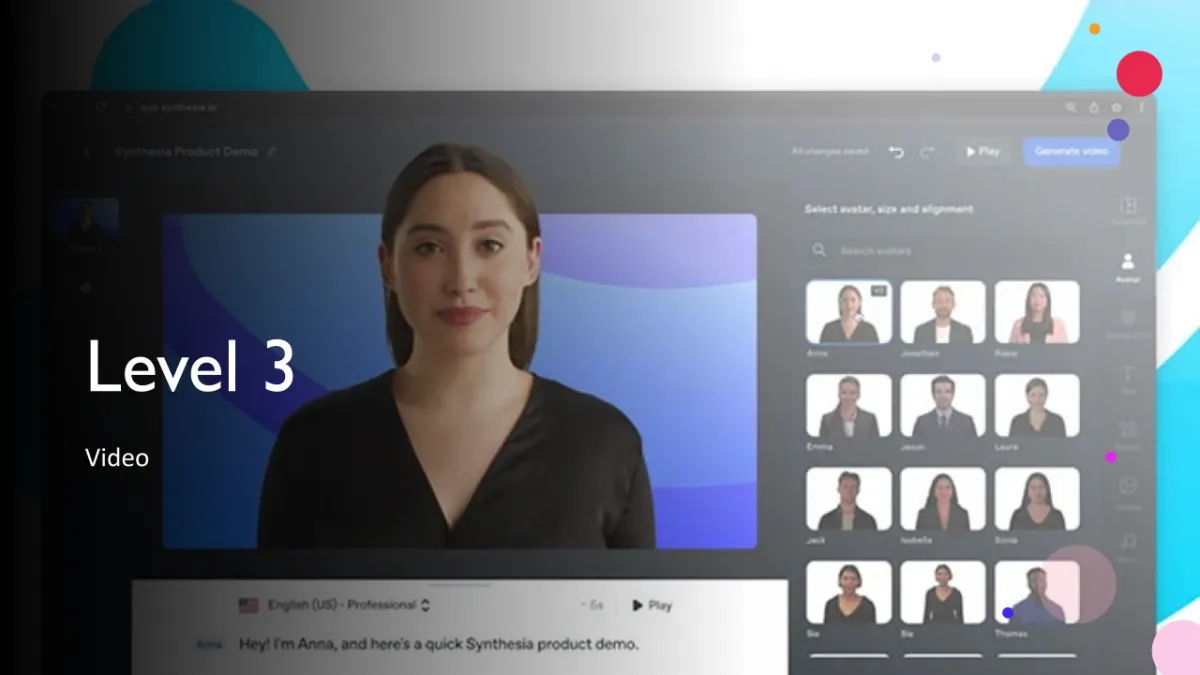

Step Three: Image Animation.

We will animate a still image (usually a photo of you) using a type of AI model called a GAN (generative adversarial network). Lip syncing can then be added so that the lips move in time with your voice model from Step Two, speaking words that mimic your vocabulary.

Step Four: Computer Vision

We will use AI to observe you through a camera (Computer Vision). This allows the AI of you to read expressions (from data points on a face) and respond in real time. With access to a camera and a microphone, your AI will also be able to see and hear. We will use the language model (LM) of you to animate the AI of you autonomously. It will use a virtual nervous system that is anatomically correct and designed to mimic the human body. This can also be referred to as an Avatar.
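To give a feel for what reading expressions from data points on a face can look like, here is a minimal Python sketch. It is an illustration only, not our production system: the `FaceLandmarks` fields, the `read_expression` function, and the threshold values are all hypothetical, and a real Computer Vision pipeline would first need a model to locate these points in each camera frame.

```python
from dataclasses import dataclass
from math import dist

# A data point on the face: (x, y) in pixel coordinates.
Point = tuple[float, float]


@dataclass(frozen=True)
class FaceLandmarks:
    """Hypothetical set of data points detected on one face."""
    left_eye: Point
    right_eye: Point
    mouth_left: Point
    mouth_right: Point
    upper_lip: Point
    lower_lip: Point


def read_expression(face: FaceLandmarks,
                    smile_ratio: float = 0.9,
                    open_ratio: float = 0.35) -> str:
    """Guess a coarse expression from mouth shape.

    Distances are divided by the eye-to-eye distance so the result does
    not depend on how close the face is to the camera. The two threshold
    values are illustrative assumptions, not tuned numbers.
    """
    eye_distance = dist(face.left_eye, face.right_eye)
    if eye_distance == 0:
        raise ValueError("eye landmarks must not coincide")

    mouth_width = dist(face.mouth_left, face.mouth_right) / eye_distance
    mouth_open = dist(face.upper_lip, face.lower_lip) / eye_distance

    if mouth_open >= open_ratio:
        return "surprised"   # mouth wide open
    if mouth_width >= smile_ratio:
        return "smiling"     # mouth stretched wide but mostly closed
    return "neutral"


# Example: one frame's worth of made-up data points.
frame = FaceLandmarks(
    left_eye=(100, 100), right_eye=(200, 100),
    mouth_left=(100, 200), mouth_right=(200, 200),
    upper_lip=(150, 195), lower_lip=(150, 205),
)
print(read_expression(frame))
```

Running a check like this on every camera frame is one simple way an avatar could react in real time, for example by smiling back when you smile.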

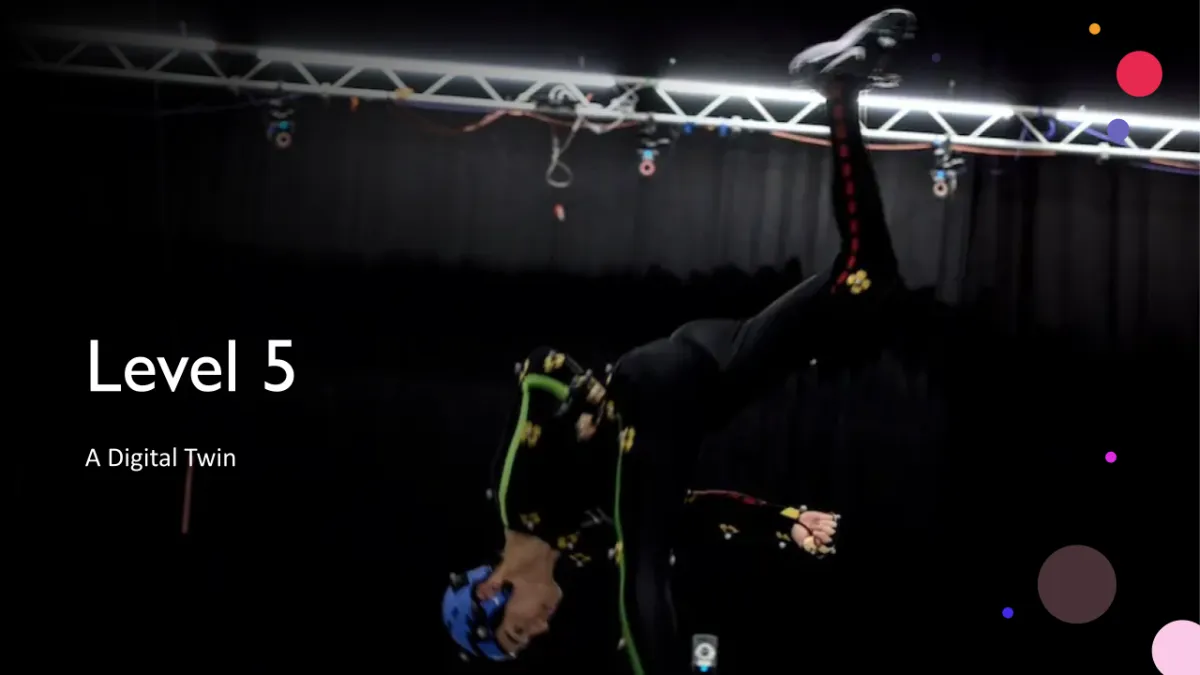

Step Five: Digital Twins

We will use motion capture on both your face and your full body. This currently requires wearing a full-body suit that captures your unique movements, facial expressions, and body language. This type of AI is referred to as a Digital Twin.

In the future, we believe this will expand into being captured at a DNA level.

© Copyright Digital Vision 2025 | All rights reserved.